ImageNet Classification with Deep Convolutional Neural Networks, “Krizhevsky et al.’s 2012 paper”

Introduction:

To recognize objects in realistic settings, much larger training sets are needed than the datasets of tens of thousands of images that were common before. This study used ImageNet, which has over 15 million labeled high-resolution images in roughly 22,000 categories.

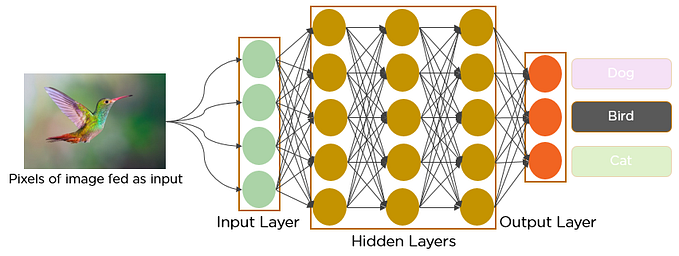

Because the recognition task is so complex, even a dataset as large as ImageNet is not enough on its own. The model also needs built-in prior knowledge, and convolutional neural networks (CNNs) provide it well. Their capacity can be controlled by varying their depth and breadth. They make strong and mostly correct assumptions about the nature of images (stationarity of statistics and locality of pixel dependencies). They also have far fewer connections and parameters than standard feedforward neural networks with similarly sized layers, so they are easier to train.
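The "fewer parameters" point can be made concrete with a rough count. The Python sketch below compares AlexNet's first convolutional layer, which the paper describes as 96 kernels of size 11 x 11 x 3, with a hypothetical fully connected layer that maps the same 224 x 224 x 3 input to the same 55 x 55 x 96 output. The helper names are illustrative, and biases are counted as one per kernel or unit.

```python
def conv_params(kernel_h, kernel_w, in_channels, out_channels):
    # One kh x kw x in_channels kernel plus one bias per output channel;
    # the same weights are reused at every spatial position.
    return out_channels * (kernel_h * kernel_w * in_channels + 1)


def dense_params(n_inputs, n_outputs):
    # Every output unit has its own weight for every input, plus a bias.
    return n_outputs * (n_inputs + 1)


conv = conv_params(11, 11, 3, 96)                  # AlexNet conv1
dense = dense_params(224 * 224 * 3, 55 * 55 * 96)  # hypothetical dense equivalent
print(f"conv layer:  {conv:,}")   # conv layer:  34,944
print(f"dense layer: {dense:,}")  # dense layer: 43,713,621,600
```

Because the convolutional kernels are shared across positions, their parameter count does not grow with the image size. The dense layer's count grows with the product of the input and output sizes.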

The model was trained on the subsets of ImageNet used in the ILSVRC-2010 and ILSVRC-2012 competitions (roughly 1.2 million training images in 1,000 categories). When the paper was published, it had achieved the best results ever reported on these datasets.

Procedures:

Pre-processing of the images:

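ImageNet contains images of varying resolution, but AlexNet requires a constant input dimensionality. The images were therefore down-sampled to 256 x 256. Each image was first rescaled so that its shorter side was 256 pixels, and then the central 256 x 256 patch was cropped out. The only other pre-processing was subtracting the mean activity over the training set from each pixel.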

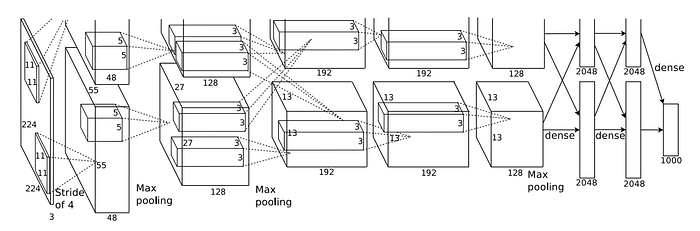
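The paper's pre-processing rescales each image so its shorter side is 256 pixels and then keeps the central 256 x 256 patch. It also subtracts the per-pixel mean over the training set. Both steps can be sketched in plain Python. This is only a sketch: it computes the rescaled size and the crop box rather than resampling real pixels (an image library would do that part), and it represents images as flat lists of numbers for the mean subtraction. The function names are hypothetical.

```python
def rescale_and_center_crop_box(width, height, target=256):
    """Size after rescaling the shorter side to `target`, and the central
    target x target crop box as (left, top, right, bottom)."""
    if width <= height:
        new_w, new_h = target, round(height * target / width)
    else:
        new_w, new_h = round(width * target / height), target
    left = (new_w - target) // 2
    top = (new_h - target) // 2
    return (new_w, new_h), (left, top, left + target, top + target)


def subtract_mean(images):
    """Subtract the per-pixel mean over the training set from every image.
    Each image is a flat list of pixel values of equal length."""
    n = len(images)
    mean = [sum(values) / n for values in zip(*images)]
    return [[p - m for p, m in zip(img, mean)] for img in images]


print(rescale_and_center_crop_box(500, 375))  # ((341, 256), (42, 0, 298, 256))
print(subtract_mean([[1, 2], [3, 4]]))        # [[-1.0, -1.0], [1.0, 1.0]]
```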

Architecture:

The network has 60 million parameters and 650,000 neurons. It consists of five convolutional layers, some of which are followed by max-pooling layers, and three fully connected layers. The last of these feeds a final 1000-way softmax.
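The sketch below checks the "60 million parameters" figure by adding up the weights and biases layer by layer. The layer sizes come from the paper's description of the overall architecture, not from this summary. The network was split across two GPUs, so kernels in conv2, conv4 and conv5 only see the feature maps on their own GPU. This is why their per-kernel inputs use 48 and 192 channels instead of the full 96 and 384. The table layout and function name are illustrative.

```python
# (layer name, number of kernels or units, inputs seen by each kernel or unit)
LAYERS = [
    ("conv1", 96, 11 * 11 * 3),
    ("conv2", 256, 5 * 5 * 48),    # only the 48 maps on the same GPU
    ("conv3", 384, 3 * 3 * 256),   # connects to all maps across both GPUs
    ("conv4", 384, 3 * 3 * 192),
    ("conv5", 256, 3 * 3 * 192),
    ("fc6", 4096, 6 * 6 * 256),    # flattened, pooled conv5 output
    ("fc7", 4096, 4096),
    ("fc8", 1000, 4096),           # feeds the 1000-way softmax
]


def count_params(layers):
    # Weights (units x fan-in) plus one bias per kernel or unit.
    return {name: units * (fan_in + 1) for name, units, fan_in in layers}


params = count_params(LAYERS)
total = sum(params.values())
fc_total = sum(n for name, n in params.items() if name.startswith("fc"))
print(f"total:           {total:,}")     # total:           60,965,224
print(f"fully connected: {fc_total:,}")  # fully connected: 58,631,144
```

By this count, the three fully connected layers hold about 96% of all parameters. The convolutional layers contribute only about 2.3 million.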

Reducing over-fitting:

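Alex used data augmentation, which I guess is the oldest trick in the book for this purpose. Well, it’s effective. The paper uses two forms. The first extracts random 224 x 224 patches (and their horizontal reflections) from the 256 x 256 images, which enlarges the training set by a factor of 2048. The second alters the intensities of the RGB channels in training images using PCA over the training set’s RGB pixel values.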
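One form of augmentation in the paper takes random 224 x 224 patches, and their horizontal reflections, from the 256 x 256 training images. A minimal sketch of that step follows. It assumes an image is stored as a list of pixel rows, and the function name and representation are illustrative. The paper's second form, PCA-based colour augmentation, is not shown.

```python
import random


def random_crop_and_flip(image, crop, rng=random):
    """Return a random crop x crop patch of `image` (a list of pixel rows),
    horizontally reflected with probability 0.5."""
    height, width = len(image), len(image[0])
    top = rng.randrange(height - crop + 1)
    left = rng.randrange(width - crop + 1)
    patch = [row[left:left + crop] for row in image[top:top + crop]]
    if rng.random() < 0.5:
        patch = [row[::-1] for row in patch]  # horizontal reflection
    return patch


# Toy 8 x 8 "image" whose pixels record their own (row, column) position.
image = [[(r, c) for c in range(8)] for r in range(8)]
patch = random_crop_and_flip(image, 4, rng=random.Random(0))
for row in patch:
    print(row)
```

For AlexNet-sized inputs, the call would be `random_crop_and_flip(img, 224)` on a 256 x 256 image. At test time, the paper does not take random patches. It averages the predictions over ten fixed patches: the four corners and the centre, plus their reflections.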

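They also used dropout in the first two fully connected layers. During training, this sets the output of each hidden neuron to zero with probability 0.5. They found it very useful: without dropout, they report, the network “exhibits substantial overfitting”. The cost is that dropout roughly doubles the number of iterations required to converge.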
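The paper describes dropout as follows. During training, each hidden neuron's output is set to zero with probability 0.5. At test time, all neurons are used, but their outputs are multiplied by 0.5. A minimal sketch of that scheme, with activations as a plain list and a hypothetical function name:

```python
import random


def dropout(activations, p=0.5, training=True, rng=random):
    """Dropout in the style described by Krizhevsky et al. (2012)."""
    if training:
        # Each neuron is dropped (output set to 0) independently with probability p.
        return [0.0 if rng.random() < p else a for a in activations]
    # At test time every neuron is kept, and outputs are scaled by (1 - p),
    # which is 0.5 for p = 0.5, to match the expected training-time output.
    return [a * (1 - p) for a in activations]


hidden = [0.8, 1.2, 0.0, 2.5]
print(dropout(hidden, rng=random.Random(1)))  # some entries zeroed
print(dropout(hidden, training=False))        # [0.4, 0.6, 0.0, 1.25]
```

Many modern frameworks implement "inverted" dropout instead. It scales the kept activations by 1 / (1 - p) during training, so no rescaling is needed at test time. The expected outputs match either way.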

Results:

On the ILSVRC-2010 test set, Krizhevsky’s network achieved top-1 and top-5 error rates of 37.5% and 17.0%. The best published results at the time were 45.7% and 25.7% respectively, so that’s quite an improvement.
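Top-1 error is the fraction of test images whose correct label is not the model's single most probable class. Top-5 error is the fraction whose correct label is not among the model's five most probable classes. A small sketch of the computation follows. The function name and toy data are hypothetical, and the toy data has only three classes, so k = 2 stands in for k = 5.

```python
def top_k_error(scores, labels, k):
    """Fraction of examples whose true label is not among the k classes
    with the highest scores."""
    misses = 0
    for class_scores, label in zip(scores, labels):
        ranked = sorted(range(len(class_scores)),
                        key=lambda i: class_scores[i], reverse=True)
        if label not in ranked[:k]:
            misses += 1
    return misses / len(labels)


scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
labels = [1, 1, 0]
print(top_k_error(scores, labels, k=1))  # 0.6666666666666666
print(top_k_error(scores, labels, k=2))  # 0.3333333333333333
```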

Conclusions:

- A large, deep CNN can achieve record-breaking results on a big, challenging dataset using purely supervised learning.

- Depth matters: removing any single middle convolutional layer degrades the network’s performance, costing about 2% in top-1 accuracy.

Personal notes:

Whenever I read an article about deep convolutional networks, AlexNet is mentioned in it about 80% of the time. Its improvement is clearly considered a groundbreaking achievement, and it earned its state-of-the-art title.